Incorporating product management into machine learning

ML in recommender systems #5

In this fifth post we will look at how to structure the metrics and machine learning (ML) objectives of a personalized recommendation system in order to achieve different product goals. There is a misconception among many industry practitioners that an ML approach reduces the role of product teams, since using ML implies not using product-driven heuristics. I would like to show that this is not the case. An ML approach is merely one lever to capture the product goals.

One has to start with the outcomes desired by product and work out the objectives of the recommendation system from those.

Case study: objectives of a content recommendation system

You are building a personalized content recommendation system. A good way to think about your goals is along three axes:

1) Engagement: You might want to maximize the click-through rate (CTR) of the recommended content, for instance. This is a sort of immediate reward: it measures the instantaneous value the user gets from the recommendation. Practically, most recommendation systems would use more down-the-funnel engagement metrics that capture true intent beyond CTR.

Fig 1: Click-through rate is one preliminary measure of engagement for the recommended content

2) Satisfaction: Even if you are doing great with getting users to 'engage' with your content, how do you know that they are satisfied? You should try to measure the satisfaction of your users with the content. One common way to do this is through surveys to collect ground truth.

Fig 2: Users may be surveyed with items that the recommender system would have served them on the home page to see their satisfaction with the content.

3) Responsibility: This is about making sure that you are not showing inappropriate or unsafe content, and not recommending misinformation, for instance. Many users treat recommended items as implicitly endorsed by the source. Hence you should be extra careful to measure the trust and safety aspects of your recommendations. Apart from surveys, another way to measure this might be a UI element that lets users cross out a recommendation, after which you could ask them for the reason.

The three axes of objectives for a recommendation system are: Engagement, Satisfaction, Responsibility. An ideal system should be able to blend these into one ensemble model.

Designing objectives of the ranking system for the above metrics

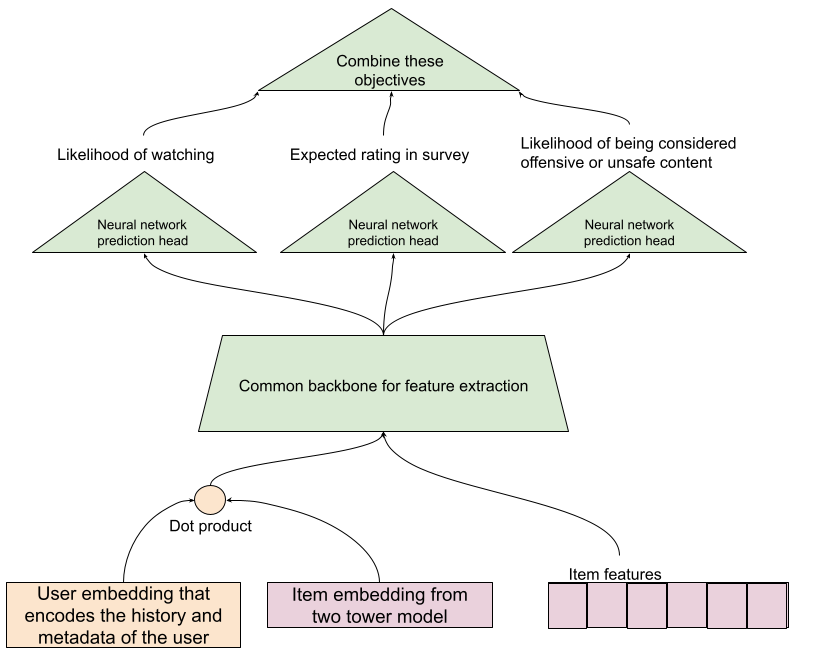

Fig 3: Once you have figured out the objectives that align with your metrics, make a trainable model architecture that learns from the different sources of user feedback. Here we are trying to predict three objectives, but instead of having three entirely different models we have a common feature extraction neural network below them. For each item this model will produce an estimate of the three objectives at serving time.
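The shared-network idea in Fig 3 can be sketched in a few lines. This is only an illustration under stated assumptions: the class name `MultiObjectiveRanker`, the layer sizes, the random untrained weights and the blending weights are all hypothetical, and training on user feedback is left out entirely. It shows one common trunk feeding three small heads, and a product-chosen weighting that blends the three estimates into one ranking score.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    # One fully connected layer; `weights` holds one row per output unit.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

class MultiObjectiveRanker:
    """Hypothetical sketch: shared feature trunk with one head per objective."""
    HEADS = ("engagement", "satisfaction", "responsibility")

    def __init__(self, n_features, n_hidden, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        # Shared feature extraction layer (untrained, random weights).
        self.trunk_w = init(n_hidden, n_features)
        self.trunk_b = [0.0] * n_hidden
        # One small output layer per objective, all reading the same trunk.
        self.head_w = {name: init(1, n_hidden) for name in self.HEADS}
        self.head_b = {name: [0.0] for name in self.HEADS}

    def predict(self, features):
        # ReLU over the shared layer, then a sigmoid per head.
        hidden = [max(0.0, h)
                  for h in dense(features, self.trunk_w, self.trunk_b)]
        return {name: sigmoid(dense(hidden, self.head_w[name],
                                    self.head_b[name])[0])
                for name in self.HEADS}

def blended_score(predictions, weights):
    # The weights are a product decision, not something the model learns here.
    return sum(weights[name] * predictions[name] for name in weights)

model = MultiObjectiveRanker(n_features=4, n_hidden=8)
preds = model.predict([0.2, 1.0, 0.0, 0.5])
score = blended_score(
    preds, {"engagement": 0.5, "satisfaction": 0.3, "responsibility": 0.2})
```

Keeping the blend outside the model is one possible design choice: the product team can shift emphasis between the three axes by changing the weights, without retraining.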

As product goals change, the metrics and ML objectives evolve

Example 1: What if the product team wants to maximize the total time spent and not the total number of videos watched? "Ranking by click-through rate often promotes deceptive videos that the user does not complete (“clickbait”) whereas watch time better captures engagement." These are excellent references on this topic: [Youtube Now::Why we focus on watch time], [Beyond clicks, Dwell time for personalization], [Deep neural networks for youtube recommendations]

Example 2: Now suppose you get feedback from your users that the recommendations are not diverse enough. Let's see how to measure and respond to it in more detail below.

Page level metrics vs item level metrics

When we talk about an engagement metric like click-through rate (CTR), we could measure this per item or for the entire page/slate of recommendations.

An item level CTR would measure the observed probability of users clicking per item presented to them. However a page level CTR would measure the probability that the user found something useful in the entire page.

Fig 4: Showing how item level and page level metrics capture different things.

Primarily maximizing item-level CTR might lead you to produce recommendations that are mostly around the topic the user is most interested in but underrepresent the secondary interests of the user. Page-level CTR appears to correlate better with the user finding something useful. However, only looking at page-level CTR might lead you to miss out on some headroom in your ranking model.
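To make the difference between the two metrics concrete, here is a small sketch. The log format (one list of 0/1 click flags per page view) and the numbers are made up for illustration. Both slates below get the same item-level CTR, but the page-level CTR differs a lot.

```python
def item_level_ctr(pages):
    """pages: list of page views, each a list of 0/1 click flags per item."""
    shown = sum(len(page) for page in pages)
    clicked = sum(sum(page) for page in pages)
    return clicked / shown if shown else 0.0

def page_level_ctr(pages):
    """Fraction of page views where the user clicked at least one item."""
    if not pages:
        return 0.0
    return sum(1 for page in pages if any(page)) / len(pages)

# Hypothetical logs: four page views of four items each.
# One user clicks three items; the other three users click nothing.
concentrated = [[1, 1, 1, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
# Three of the four users each find one item worth clicking.
spread_out = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0], [1, 0, 0, 0]]

print(item_level_ctr(concentrated), page_level_ctr(concentrated))  # 0.1875 0.25
print(item_level_ctr(spread_out), page_level_ctr(spread_out))      # 0.1875 0.75
```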

Designing a recommendation system to enhance diversity

Fig 5: How to change ranking and display to maximize the page level metrics discussed above.
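One simple way to change the ranking for diversity is a greedy re-rank that discounts an item's score each time its topic is already on the slate. This is a swapped-in illustration, not necessarily the approach in the figure; the function name `diversify`, the `repeat_penalty` value and the candidate data are all hypothetical.

```python
def diversify(candidates, slate_size, repeat_penalty=0.5):
    """Greedy re-rank. candidates: list of (item_id, score, topic) tuples.

    Each time a topic is already on the slate, the score of further
    items from that topic is multiplied by `repeat_penalty`.
    """
    remaining = list(candidates)
    topic_counts = {}
    slate = []
    while remaining and len(slate) < slate_size:
        best = max(
            remaining,
            key=lambda c: c[1] * repeat_penalty ** topic_counts.get(c[2], 0),
        )
        slate.append(best[0])
        topic_counts[best[2]] = topic_counts.get(best[2], 0) + 1
        remaining.remove(best)
    return slate

# Hypothetical candidates: the user's primary interest is cooking.
candidates = [
    ("cook1", 0.90, "cooking"),
    ("cook2", 0.85, "cooking"),
    ("cook3", 0.80, "cooking"),
    ("travel1", 0.60, "travel"),
    ("music1", 0.50, "music"),
]

print(diversify(candidates, 3))                      # ['cook1', 'travel1', 'music1']
print(diversify(candidates, 3, repeat_penalty=1.0))  # ['cook1', 'cook2', 'cook3']
```

With the penalty switched off (`repeat_penalty=1.0`) the slate is the plain top three by score, all from the primary interest. With the penalty on, the secondary interests get a slot, which is the kind of change that tends to show up in page-level rather than item-level metrics.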

Optimizing for the long term

So far we have been measuring the goodness of a slate of recommendations mostly based on immediate actions of the user. However, the goal of the product is to help users along the activation-to-retention funnel so that they keep coming back to the service. The business thus cares about long-term effects such as how often the user comes back. How do we handle that?

The idea is to measure and train for exactly that. For instance, if we have two items with roughly similar probability of being liked by the user, but one of them is the start of a long series and the other is a one-off piece of content, we want to recommend the one from the series first. This way there is a higher likelihood of the user coming back again.

Fig 6: The value of a recommendation to the business is not just related to the current probability of the user watching a video but also future watches that are made more likely due to the recommendation. (Note that the images above have been used for illustrative purposes, and we are not claiming any information about the titles in question.)

Metrics

Long-term metrics could be the total time spent by users on the platform consuming content, or the average number of times a user returns to the platform in a month (a.k.a. L28).
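As a rough sketch, both metrics can be computed from session logs. The log format `(user_id, day_index, minutes_watched)` is hypothetical, and "returns in a month" is read here as the number of distinct active days in a 28-day window, which is an assumption about how L28 is defined.

```python
from collections import defaultdict

def long_term_metrics(sessions, window_days=28):
    """sessions: iterable of (user_id, day_index, minutes_watched) tuples."""
    minutes = defaultdict(float)
    active_days = defaultdict(set)
    for user_id, day, mins in sessions:
        if 0 <= day < window_days:  # ignore sessions outside the window
            minutes[user_id] += mins
            active_days[user_id].add(day)
    n_users = len(active_days)
    if n_users == 0:
        return {"total_minutes": 0.0, "avg_active_days": 0.0}
    return {
        "total_minutes": sum(minutes.values()),
        # Assumed reading of L28: distinct active days, averaged over users.
        "avg_active_days": sum(len(d) for d in active_days.values()) / n_users,
    }

# Made-up logs: u1 watches twice on day 0 and once on day 7; u2 once on day 3.
sessions = [("u1", 0, 30.0), ("u1", 0, 10.0), ("u1", 7, 20.0), ("u2", 3, 15.0)]
metrics = long_term_metrics(sessions)
print(metrics)  # {'total_minutes': 75.0, 'avg_active_days': 1.5}
```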

Objectives

In this paper and video presentation, the authors have used a reinforcement learning approach to find a recommendation policy that maximizes not the probability of an instantaneous click but the total time watched in the next 8 hours or so. Note that the authors mention that this was one of the biggest launches at YouTube in the last two years!

However, there is a simpler way to think about this problem. So far we have been ranking items based on the probability of a click. What if we were to rank based on a sum of the probability of a click and the presence of extremely related content? For the second part we just need a model that, given a source item, returns content that is very likely to be watched after the source item is watched.

Total value of recommending an item = Prob Click (user, item) + Prob Click (user, item) * Expected time spent watching other items that are usually watched after this item.

We already have a model for the first part. The model for the second part is usually developed separately as a "WatchNext" model. This is a good research paper on this topic. While this paper describes a personalized WatchNext model, a user-agnostic WatchNext model fits well in this setup too. If the model is unpersonalized, we could compute this second term offline, store it in a key-value table keyed by the recommended item, and just load that value as a feature of the recommended video at serving time.
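Here is a minimal sketch of that serving-time computation, assuming an unpersonalized WatchNext term that has already been computed offline. The table contents, item ids and click probabilities are made up, and the follow-on watch time is in hypothetical hours. The sum follows the formula above literally; how to scale the two terms against each other is left open.

```python
# Hypothetical offline table: item id -> expected follow-on watch time (hours).
FOLLOW_ON_WATCH_TIME = {
    "series_ep1": 3.0,    # first episode of a long series
    "one_off_clip": 0.2,  # standalone content with little follow-on viewing
}

def total_value(p_click, item_id, follow_on_table, default=0.0):
    """Total value = P(click) + P(click) * expected follow-on watch time."""
    follow_on = follow_on_table.get(item_id, default)
    return p_click + p_click * follow_on

# Two candidates with roughly similar click probabilities (made-up numbers).
candidates = {"series_ep1": 0.30, "one_off_clip": 0.32}

ranked = sorted(
    candidates,
    key=lambda item: total_value(candidates[item], item, FOLLOW_ON_WATCH_TIME),
    reverse=True,
)
print(ranked)  # ['series_ep1', 'one_off_clip']
```

On click probability alone the one-off clip would rank first. Once the follow-on term is included, the series opener comes first, which matches the series example from earlier in the post.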

Conclusion

We looked at how to start with the goals of the product and use those to design the machine learning (ML) based recommender system. First, we identified performance metrics that represent the goals appropriately. Then we designed the learning objectives of the ML model to be in sync with these metrics. The objectives can be either short-term or long-term.

This was originally posted on LinkedIn.

Disclaimer: These are my personal opinions only. Any assumptions, opinions stated here are mine and not representative of my current or any prior employer(s).
