Logging | How to build self-learning search and recommender systems

Server side and client side logging needed to build recommender systems, ads and search systems

Let’s look at why logging is important in recommender, search, and ads systems: what to log, how to log it, and what to do with the logs afterwards.

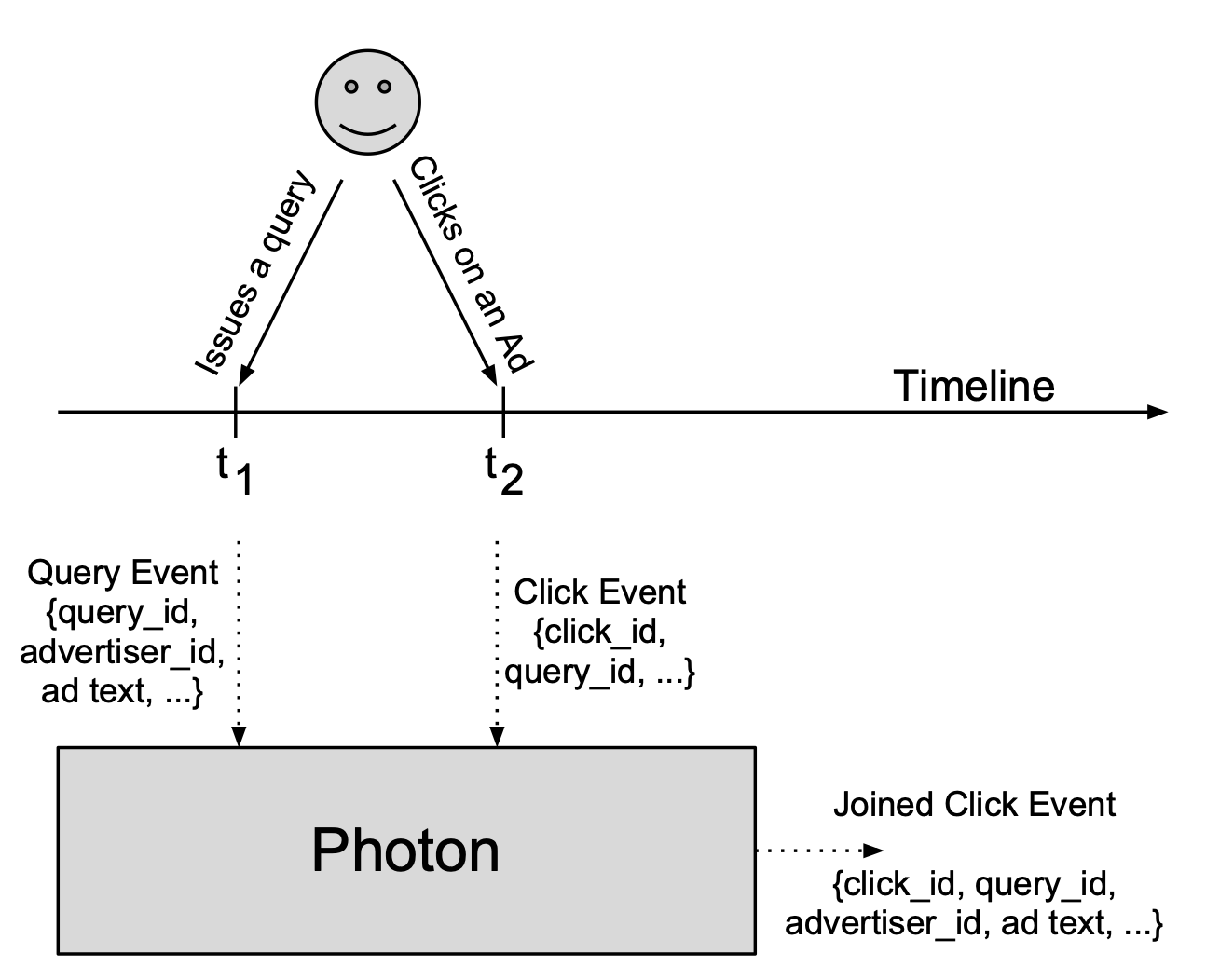

To whet your appetite and give you the gist of the article, see the image above from Google’s Photon paper. It shows why logging is absolutely critical for Google ads.

Why is logging important?

Accounting / Attribution: The Photon paper above, for instance, is motivated by a core business problem: showing advertisers which queries their ads were shown for and which of those led to clicks. In search systems, the same logging lets us find the queries we are not doing well on, i.e. the ones where the top results need improvement.

Ranking improvements: The operative word in machine learning is “learning”. For the (result) ranking model to improve, it needs to learn from usage. See the section below for details.

Launch decisions: Logging lets us see what is working and which experiments are launch-worthy.

In this article we focus on ranking, though the ideas carry over to the other use cases without loss of generality.

What’s a ranking model and why do we need logging to train it?

Modern ML systems are dynamical systems: they are constantly learning and changing. Scalable feature and feedback logging is a means of supporting that learning.

Most successful recommender systems today use a two-stage process (see Fig 2 above). Retrieval refers to the pink boxes, which run in parallel to fetch results that could be relevant. Ranking refers to the light green boxes at the bottom right, which order the 100 or so retrieved results at serving time.
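A minimal sketch of the two-stage flow, assuming hypothetical names (`Candidate`, `two_stage`, `Scorer`) and a stand-in scoring function in place of a real ranking model. For simplicity the retrievers run one after another here rather than in parallel; the sketch only shows the shape: union and deduplicate candidates from several retrievers, cap them at roughly 100, then order them by model score.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Candidate:
    result_id: str
    features: Dict[str, float]


# Hypothetical types: a retriever maps query text to candidates,
# and a scorer stands in for the ranking model.
Retriever = Callable[[str], List[Candidate]]
Scorer = Callable[[str, Candidate], float]


def two_stage(query_text: str, retrievers: List[Retriever],
              score: Scorer, max_candidates: int = 100) -> List[Candidate]:
    # Stage 1 (retrieval): union the candidates from every retriever,
    # keeping the first copy of each result_id.
    pool: Dict[str, Candidate] = {}
    for retrieve in retrievers:
        for cand in retrieve(query_text):
            pool.setdefault(cand.result_id, cand)
    candidates = list(pool.values())[:max_candidates]
    # Stage 2 (ranking): order the retrieved candidates by model score,
    # highest first.
    return sorted(candidates, key=lambda c: score(query_text, c), reverse=True)
```

For example, with one retriever returning results `a` and `b` and another returning `b` and `c`, `two_stage` scores `a`, `b` and `c` once each and returns them highest score first. The features handed to the scorer are the ones worth logging server-side.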

Schematic of what to log server-side and client-side

```protobuf
syntax = "proto3";

message Query {
  string query_id = 1;
  string query_text = 2;
}
```

The search or recommendation request should carry a query id.

```protobuf
syntax = "proto3";

import "result_features.proto";
// ^ defines ResultFeatures, the features used by the ranking model
// (assumed here to also define ResultPropensities)

message QueryResultInternal {
  // a unique identifier for the item being
  // returned. result_id does not depend on
  // the query.
  string result_id = 1;
  // url below is being used generally to denote
  // what the user is looking for.
  string url = 2;
  // ranking features
  ResultFeatures features = 3;
  // ranking outputs, i.e. estimates of objectives
  // being optimized.
  ResultPropensities propensities = 4;
}

message QueryResponseInternal {
  // same query id as was provided in the request
  string query_id = 1;
  repeated QueryResultInternal results = 2;
}
```

The ranking module should send results along with the features the ranking model used to compute the ranking scores. These are logged by the server-side logging module.

```protobuf
syntax = "proto3";

message QueryResult {
  // a unique identifier for the item being
  // returned. result_id does not depend on
  // the query.
  string result_id = 1;
  // url below is being used generally to denote
  // what the user is looking for.
  string url = 2;
}

message QueryResponse {
  // same query id as was provided in the request
  string query_id = 1;
  repeated QueryResult results = 2;
}
```

The server-side logging module then strips out the features (and the propensities) before the response goes to the client: the client does not need them, and sending them would raise privacy and security concerns. The results sent to the client contain only the ids and whatever is needed to display them.
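The log-then-strip step can be sketched with plain Python dataclasses mirroring the messages above. This is an assumption made so the example runs without generated protobuf code; the `log_and_strip` helper, the in-memory `log` list, and the dict-typed `features`/`propensities` fields are hypothetical. The full internal response goes to the server-side log, and only the ids and display fields go to the client.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class QueryResultInternal:
    result_id: str
    url: str
    features: Dict[str, float] = field(default_factory=dict)       # ranking features
    propensities: Dict[str, float] = field(default_factory=dict)   # ranking outputs


@dataclass
class QueryResponseInternal:
    query_id: str
    results: List[QueryResultInternal]


@dataclass
class QueryResult:
    result_id: str
    url: str


@dataclass
class QueryResponse:
    query_id: str
    results: List[QueryResult]


def log_and_strip(internal: QueryResponseInternal,
                  log: List[QueryResponseInternal]) -> QueryResponse:
    # Server side: keep the full response, features included, for training.
    log.append(internal)
    # Client side: same query_id and result order, but no features or propensities.
    return QueryResponse(
        query_id=internal.query_id,
        results=[QueryResult(r.result_id, r.url) for r in internal.results],
    )
```

Because the client response keeps the same `query_id`, any click it produces can later be matched back to the logged features.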

```protobuf
syntax = "proto3";

message Click {
  // same query id as was provided in the response
  string query_id = 1;
  // a unique identifier for the item being clicked
  string result_id = 2;
}
```

The client-side logger only needs to log a click event with the original query id and the id of the clicked result.

Joining the three logged records above (the Query, the server-side QueryResponseInternal, and the Click) on query_id produces the required training data.
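A minimal in-memory sketch of that join, assuming each log has already been loaded into Python structures: a query log mapping `query_id` to `query_text`, a response log mapping `query_id` to the ranked `(result_id, features)` pairs, and a click log of `(query_id, result_id)` pairs. The `join_logs` name and these shapes are hypothetical; at scale this would be a streaming or batch join keyed on `query_id`.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

RankedResults = List[Tuple[str, Dict[str, float]]]  # (result_id, features), in rank order


def join_logs(queries: Dict[str, str],
              responses: Dict[str, RankedResults],
              clicks: List[Tuple[str, str]]) -> List[Dict[str, object]]:
    """Join the three logs on query_id.

    Only query_ids present in both the query log and the response log are kept;
    clicks whose query_id has no logged response are ignored.
    """
    clicked_by_query: Dict[str, Set[str]] = defaultdict(set)
    for query_id, result_id in clicks:
        clicked_by_query[query_id].add(result_id)

    joined = []
    for query_id, results in responses.items():
        if query_id not in queries:
            continue  # no query text, so no usable training example
        joined.append({
            "query_id": query_id,
            "query_text": queries[query_id],
            "results": results,  # features exactly as logged at serving time
            "clicked": clicked_by_query.get(query_id, set()),
        })
    return joined
```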

Let’s assume the user clicked on the third result. A positive example can thus be made from the query_text and the ranking features of the result_id corresponding to the Click event.

Results ranked above the clicked one can be assumed to have been considered but not clicked, so we can generate negative examples from them. Each consists of the query_text and the ranking features of a result ranked above the result_id in the Click event. The only difference from positive examples is that the label for these data points is 0, i.e. a non-click.
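The labeling rule can be sketched for a single click as follows. The `make_examples` name and the `(query_text, features, label)` tuple are hypothetical, and results ranked below the click are skipped entirely, since the user may never have seen them. If the clicked id is missing from the logged results, the query is dropped rather than guessed at.

```python
from typing import Dict, List, Tuple

Example = Tuple[str, Dict[str, float], int]  # (query_text, features, label)


def make_examples(query_text: str,
                  ranked_results: List[Tuple[str, Dict[str, float]]],
                  clicked_id: str) -> List[Example]:
    """Label 1 for the clicked result, 0 for every result ranked above it."""
    examples: List[Example] = []
    for result_id, features in ranked_results:
        if result_id == clicked_id:
            examples.append((query_text, features, 1))  # positive: the click
            break  # results below the click are not labeled
        examples.append((query_text, features, 0))      # negative: seen, not clicked
    else:
        return []  # clicked_id not among the logged results
    return examples
```

With a click on the third result, as in the text, this yields two negatives followed by one positive.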

Training data

Following the steps above, we can generate training data to update the ranking model in a scalable way and, if needed, with exactly-once semantics (see the Photon paper for reference).

Deepak Agarwal describes the above as “loose coupling between frontend and backend data tracking” in his tutorial on building real-life recommender systems.

Joining is also covered in Real-time data processing at Facebook.
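To make the exactly-once idea concrete, here is a toy, single-process sketch loosely modeled on the registry of already-joined event ids that the Photon paper describes. It is not Photon: the real registry is replicated and fault-tolerant, whereas this `IdRegistry` class is an in-memory set, and `join_once` and the `click_id` field are hypothetical. Replayed or duplicated clicks are emitted at most once, and a click whose query has not been logged yet is left uncommitted so it can be retried later.

```python
from typing import Dict, Iterable, List, Set, Tuple


class IdRegistry:
    """Toy in-memory record of event ids that have already been joined."""

    def __init__(self) -> None:
        self._seen: Set[str] = set()

    def try_commit(self, event_id: str) -> bool:
        # True only the first time a given id is committed.
        if event_id in self._seen:
            return False
        self._seen.add(event_id)
        return True


def join_once(clicks: Iterable[Tuple[str, str, str]],
              responses: Dict[str, List[str]],
              registry: IdRegistry) -> List[Tuple[str, str]]:
    """clicks are (click_id, query_id, result_id) and may contain duplicates."""
    joined = []
    for click_id, query_id, result_id in clicks:
        if query_id not in responses:
            continue  # query not logged yet: do not commit, retry later
        if registry.try_commit(click_id):
            joined.append((query_id, result_id))
    return joined
```

Committing the id only once the join succeeds is what lets a retried click eventually be joined once, rather than never or twice.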

Training-serving skew

Is this necessary? Is there another way? What if we don’t log the features? Could we generate them later, when we are modeling? Yes, but that introduces a risk known as training-serving skew: the model could be trained on feature values that differ from the ones that would have been recorded at serving time.
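Logging the features also makes skew measurable. A minimal sketch, assuming both the served (logged) and the later recomputed features are available as dicts keyed by a hypothetical example id: for each feature it reports the fraction of examples where the recomputed value disagrees with the logged one. A feature such as a result’s recent click-through rate, which keeps changing after the query is served, would show up here. `skew_report` and the tolerance default are illustrative choices, not a standard API.

```python
from typing import Dict

Features = Dict[str, float]


def skew_report(logged: Dict[str, Features],
                recomputed: Dict[str, Features],
                tol: float = 1e-6) -> Dict[str, float]:
    """Per feature, the fraction of logged examples whose recomputed value
    is missing or differs from the served value by more than tol."""
    mismatches: Dict[str, int] = {}
    totals: Dict[str, int] = {}
    for example_id, served in logged.items():
        later = recomputed.get(example_id, {})
        for name, value in served.items():
            totals[name] = totals.get(name, 0) + 1
            if name not in later or abs(later[name] - value) > tol:
                mismatches[name] = mismatches.get(name, 0) + 1
    return {name: mismatches.get(name, 0) / totals[name] for name in totals}
```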

Quiz

Why is it important to log query_id in the click event?

Which process generates the query_id, and how would you make sure it is globally unique?

How does the Photon paper deliver exactly once semantics with this paradigm?

How would you relax exactly-once semantics so that the ranking model can learn in real time? (an example of online learning is the ads ranking setup described here)

Hope this helps, and come join us in learning together!

If you want to discuss/teach/learn about applied ML, please join our Discord community here!

References

Photon: Fault-tolerant and scalable joining of continuous data streams

Tutorial: Lessons learned from building real-life recommender systems

Disclaimer: These are the personal opinions of the author. Any assumptions, opinions stated here are theirs and not representative of their current or any prior employer(s).