Entrypoint retention modeling in recommender systems

Choose/rank items at the entrypoint of a recommended feed to drive retention and not just consumption

In our previous post, we shared what we learned about accounting for the risk of abandonment in a repeated recommender system. In this post, we focus on the initial recommendation, which we call the “entrypoint” item, and share how improving entrypoint recommendations has, in our experience, led to more daily active users and app-sessions.

What do we mean by “entrypoint” recommendations

Carousel entrypoint: Many apps show a carousel of options; selecting one opens an immersive UI where you can scroll for more content. An example is the YouTube Shorts carousel shown below.

Feed entrypoint: We have also had success applying these approaches to the first item in an interface where the user can swipe/scroll up to see more recommendations.

In both situations, the entrypoint item strongly affects the value the user derives from the session. Getting it wrong can lead to shallow sessions, shorter current sessions, and fewer future sessions.

The benefit of entrypoint retention modeling

Retention modeling at the carousel entrypoint

If done right, it can lead to:

a reduction in the number of single-item sessions, i.e., sessions that don’t advance beyond the item selected in the carousel

longer app-sessions

more app-sessions and daily active users, especially among infrequent (non-power) users

increased revenue as a result of the effects above

Retention modeling at the feed entrypoint

If done right, it can lead to:

a reduction in “skip rate”, the rate at which users skip the recommended item

longer app-sessions

more app-sessions and daily active users, especially among infrequent (non-power) users

increased revenue as a result of the effects above

Mathematical derivation

Given that the user is guaranteed to see the first recommendation (the “entrypoint”), the cumulative value of a trajectory is Eq(1), where:

V(i) is the value derived from the i_th recommendation, with i = 0 being the entrypoint

exit(i) is 1 if the user exits the feed at the i_th recommendation, and 0 otherwise
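To make Eq(1) concrete, here is a minimal Python sketch that scores one logged session. It assumes Eq(1) has the form \(V(\tau) = V(0) + \sum_{i=1}^{\infty} V(i) \prod_{j=0}^{i-1} (1 - \text{exit}(j))\), i.e. the value at position i counts only if the user did not exit at any earlier position. The function name `trajectory_value` and the finite list representation of a session are illustrative assumptions.

```python
from typing import Sequence


def trajectory_value(values: Sequence[float], exits: Sequence[int]) -> float:
    """Cumulative value of one logged trajectory (assumed form of Eq(1)).

    values[i] is V(i), the value from the i_th recommendation (i = 0 is the
    entrypoint); exits[i] is exit(i), 1 if the user exits at position i.
    """
    if len(values) != len(exits):
        raise ValueError("values and exits must have the same length")
    total = values[0]   # V(0): the entrypoint is always seen
    still_in_feed = 1   # running product of (1 - exit(j)) for j < i
    for i in range(1, len(values)):
        still_in_feed *= 1 - exits[i - 1]
        total += values[i] * still_in_feed
    return total


# Hypothetical session: the user sees three items and exits at position 2,
# so the value listed for position 3 is never realized.
print(trajectory_value([5.0, 3.0, 2.0, 4.0], [0, 0, 1, 0]))  # 10.0
```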

We use this form, Eq(1), in the implementation section. Before diving into implementation, though, it helps to look at the problem in a couple of other ways that connect it to the approach from our previous post of incorporating P(exit).

Eq(2): We can also write the value in a way that separates the contribution of each position in the feed.
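One way to make this per-position separation concrete, in expectation, is sketched below. This is our assumption rather than a reproduction of Eq(2): if exit decisions at different positions are independent of each other and of the item values, then \(E[V(\tau)] = E[V(0)] + \sum_{i=1}^{\infty} P(\text{reach } i)\, E[V(i)]\) with \(P(\text{reach } i) = \prod_{j=0}^{i-1} (1 - P(\text{exit}(j) = 1))\). The function name and the numbers are hypothetical.

```python
from typing import List, Sequence


def per_position_contributions(
    expected_values: Sequence[float], exit_probs: Sequence[float]
) -> List[float]:
    """Expected value contributed by each feed position.

    Position i contributes P(reach i) * E[V(i)], where
    P(reach i) = prod_{j < i} (1 - P(exit at j)) and P(reach 0) = 1.
    Assumes exits are independent across positions and of the values.
    """
    contributions = []
    p_reach = 1.0
    for ev, p_exit in zip(expected_values, exit_probs):
        contributions.append(p_reach * ev)
        p_reach *= 1.0 - p_exit  # probability of surviving to the next position
    return contributions


# Hypothetical per-position estimates for a four-item feed.
parts = per_position_contributions([4.0, 2.0, 4.0, 8.0], [0.5, 0.5, 0.5, 0.5])
print(parts, sum(parts))  # [4.0, 1.0, 1.0, 1.0] 7.0
```

The first element is E[V(0)], the only term a pointwise entrypoint model sees; every later element is scaled by the probability of surviving the entrypoint.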

Another way to look at this is Eq(3):

In general, the value starting at the k_th position is the value from that recommendation plus, conditional on the user not exiting, the value from the rest of the session starting at position (k+1).
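Written out, this recursion is \(V_{\ge k} = V(k) + (1 - \text{exit}(k))\, V_{\ge k+1}\), where \(V_{\ge k}\) is our shorthand for the value of the session from position k onward. The minimal Python sketch below evaluates it with a backward pass over a logged session; the function name and the convention that the value beyond the last logged position is 0 are assumptions.

```python
from typing import List, Sequence


def values_from_each_position(
    values: Sequence[float], exits: Sequence[int]
) -> List[float]:
    """Return [V_{>=0}, V_{>=1}, ...] using the recursion described above.

    V_{>=k} = V(k) + (1 - exit(k)) * V_{>=k+1}, with the value beyond the
    last logged position taken to be 0.
    """
    if len(values) != len(exits):
        raise ValueError("values and exits must have the same length")
    result = [0.0] * len(values)
    rest = 0.0  # V_{>=k+1}; zero past the end of the log
    for k in range(len(values) - 1, -1, -1):
        rest = values[k] + (1 - exits[k]) * rest
        result[k] = rest
    return result


# Hypothetical session with an exit at position 2.
print(values_from_each_position([5.0, 3.0, 2.0, 4.0], [0, 0, 1, 0]))
# [10.0, 5.0, 2.0, 4.0]
```

The first entry, 10.0, is the value of the whole session (5 + 3 + 2, nothing counted after the exit). The entrypoint influences it twice: through V(0) and through exit(0), which gates everything after it.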

Key insights

Our key insights are:

Optimizing the entrypoint purely for the pointwise reward E[V(0)] ignores the second term in Eq(1), the value from the rest of the session.

Entrypoint recommendations affect the value of the full session because, as Eq(3) shows, the entrypoint item directly affects the probability of exit and hence the second term.

Since improved entrypoint recommendations should have a larger impact on users who don’t yet have entrenched habits, it may help to limit the training data for these tasks to infrequent users.

In responsive recommender systems, which adapt what they show based on previous user interactions, entrypoint items with good follow-on recommendation options can capitalize on the user intent generated by the entrypoint.

Because the entrypoint’s causal influence weakens for positions further into the session, we can use a discounted sum of future rewards instead of a plain sum (see retention_time_spent in the Recommended Implementation section below).
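To illustrate the first two insights, the sketch below ranks two hypothetical entrypoint candidates once by the pointwise reward E[V(0)] and once by an estimate of session value. The session estimate is an assumption for illustration: immediate value plus downstream value counted only if the user stays, i.e. weighted by 1 - P(exit at the entrypoint). All class names, fields, and numbers are made up.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    name: str
    immediate_value: float   # hypothetical estimate of E[V(0)]
    p_exit: float            # hypothetical estimate of P(exit at the entrypoint)
    downstream_value: float  # hypothetical discounted value from position 1 on


def pointwise_score(c: Candidate) -> float:
    return c.immediate_value


def session_score(c: Candidate) -> float:
    # Immediate value plus downstream value, counted only if the user stays.
    return c.immediate_value + (1.0 - c.p_exit) * c.downstream_value


def rank(cands: List[Candidate], score: Callable[[Candidate], float]) -> List[str]:
    return [c.name for c in sorted(cands, key=score, reverse=True)]


candidates = [
    Candidate("item_a", immediate_value=6.0, p_exit=0.75, downstream_value=8.0),
    Candidate("item_b", immediate_value=4.0, p_exit=0.25, downstream_value=8.0),
]
print(rank(candidates, pointwise_score))  # ['item_a', 'item_b']
print(rank(candidates, session_score))    # ['item_b', 'item_a']
```

Both candidates share the same downstream value here to isolate the effect of P(exit); in a responsive system the downstream value would also depend on the entrypoint.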

Recommended Implementation

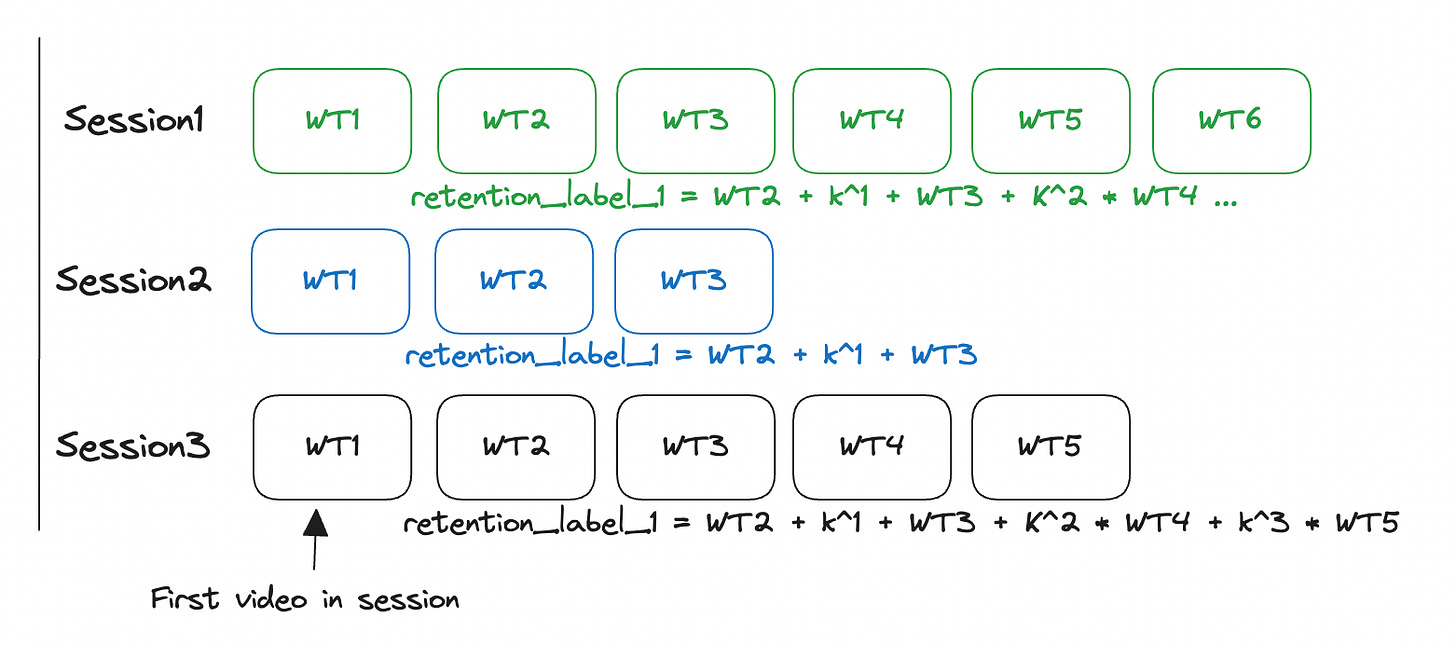

Join the user’s interaction with the entrypoint item to the value from the rest of the session, such as time spent, number of items seen, and likes.

In the initial iteration, we recommend keeping these downstream tasks separate, for example:

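retention_time_spent = discounted sum of time spent starting at position 1, i.e. the second term of the discounted trajectory value below, with V(i) as the time spent on the i_th item and α a discount factor:

\(V(\tau) = V(0) + \sum_{i=1}^{\infty} \alpha^i V(i)\)

retention_items_seen = discounted count of items seen starting at position 1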

retention_conversions etc.
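A minimal Python sketch of this join and of the discounted retention labels. It assumes a hypothetical impression log with one row per item shown (session_id, position, item_id, time_spent, converted), position 0 being the entrypoint, and a hypothetical discount factor alpha. Every logged position from 1 onward is counted as “seen”; adapt this to your own definitions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class Impression(NamedTuple):  # hypothetical log schema
    session_id: str
    position: int      # 0 is the entrypoint item
    item_id: str
    time_spent: float  # seconds
    converted: bool


def retention_labels(impressions: Iterable[Impression], alpha: float = 0.9) -> List[dict]:
    """One training row per session: entrypoint interaction + discounted labels."""
    by_session: Dict[str, List[Impression]] = defaultdict(list)
    for imp in impressions:
        by_session[imp.session_id].append(imp)

    rows = []
    for session_id, imps in by_session.items():
        imps.sort(key=lambda x: x.position)
        entry = imps[0]
        if entry.position != 0:
            continue  # no logged entrypoint; skip this session
        rest = [x for x in imps if x.position >= 1]
        rows.append({
            "session_id": session_id,
            "entry_item_id": entry.item_id,
            "entry_time_spent": entry.time_spent,
            # Discounted sums start at position 1, weighted by alpha ** position.
            "retention_time_spent": sum(alpha ** x.position * x.time_spent for x in rest),
            "retention_items_seen": sum(alpha ** x.position for x in rest),
            "retention_conversions": sum(alpha ** x.position * x.converted for x in rest),
        })
    return rows


log = [
    Impression("s1", 0, "a", 10.0, False),
    Impression("s1", 1, "b", 20.0, True),
    Impression("s1", 2, "c", 5.0, False),
    Impression("s2", 0, "d", 2.0, False),  # single-item session: all labels are 0
]
for row in retention_labels(log, alpha=0.5):
    print(row)
```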

Add these tasks to your multi-task estimator (“ranking”) model.

Experiment with conditioning the training data on the right user segment (for example, infrequent users) and perhaps on entrypoints where the immediate value to the user was strong enough.
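One way to run this experiment is to filter training rows before fitting the retention tasks. The sketch below assumes hypothetical row fields `user_active_days_28d` and `entry_time_spent` and hypothetical thresholds; the segment definition and thresholds are knobs to tune, not recommendations.

```python
from typing import Iterable, List


def select_retention_training_rows(
    rows: Iterable[dict],
    max_active_days: int = 7,
    min_entry_time_spent: float = 3.0,
) -> List[dict]:
    """Keep rows from infrequent users whose entrypoint engagement was strong enough.

    Both fields and both thresholds are hypothetical placeholders.
    """
    return [
        r for r in rows
        if r["user_active_days_28d"] <= max_active_days
        and r["entry_time_spent"] >= min_entry_time_spent
    ]


rows = [
    {"session_id": "s1", "user_active_days_28d": 3, "entry_time_spent": 10.0},
    {"session_id": "s2", "user_active_days_28d": 25, "entry_time_spent": 12.0},  # power user
    {"session_id": "s3", "user_active_days_28d": 2, "entry_time_spent": 1.0},    # weak entrypoint
]
print([r["session_id"] for r in select_retention_training_rows(rows)])  # ['s1']
```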

Assuming you use a multi-task fusion (a.k.a. “value model”) approach to combine your task estimates and pick the entrypoint item to recommend, expect a fair amount of iteration in this step to incorporate the new tasks.
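As a starting point for that iteration, the sketch below assumes the simplest value model, a linear weighted sum of task estimates. The retention task names mirror the tasks above; `p_click`, the candidate estimates, and all weights are hypothetical and would need tuning, typically through online experiments.

```python
from typing import Dict


def fused_score(estimates: Dict[str, float], weights: Dict[str, float]) -> float:
    """Linear multi-task fusion: sum of weight * estimate over weighted tasks."""
    return sum(w * estimates.get(task, 0.0) for task, w in weights.items())


def pick_entrypoint(candidates: Dict[str, Dict[str, float]],
                    weights: Dict[str, float]) -> str:
    """Return the candidate id with the highest fused score."""
    return max(candidates, key=lambda cid: fused_score(candidates[cid], weights))


# Hypothetical weights: immediate engagement plus the new retention tasks.
weights = {"p_click": 1.0, "retention_time_spent": 0.05, "retention_items_seen": 0.5}
candidates = {
    "item_a": {"p_click": 0.30, "retention_time_spent": 10.0, "retention_items_seen": 1.0},
    "item_b": {"p_click": 0.20, "retention_time_spent": 40.0, "retention_items_seen": 3.0},
}
print(pick_entrypoint(candidates, weights))          # item_b
print(pick_entrypoint(candidates, {"p_click": 1.0}))  # item_a
```

With only the immediate task weighted, item_a wins; adding the retention tasks flips the choice, which is the kind of trade-off the weight iteration has to settle.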


Disclaimer: These are the personal opinions of the author(s). Any assumptions, opinions stated here are theirs and not representative of their current or any prior employer(s). Apart from publicly available information, any other information here is not claimed to refer to any company including ones the author(s) may have worked in or been associated with.