Personalized short-video recommender systems

To recap the previous two articles in this short-video recommendation series: part 1, “Using online learning in short-video recommendations”, showed a baseline solution that delivers fresh short-video recommendations for potentially billions of users in under 50 milliseconds (P99). Part 2, “ML Design Interview | Design a short-video platform Part 2”, approached the problem holistically, describing what success means for all three stakeholders: video-watchers, video-creators and the video-platform.

In this third and last part of this short-video recommender series, we will propose an ML model that caters to user-value, creator-value and platform-value.

Platform Value - Multi-category bucket-flows

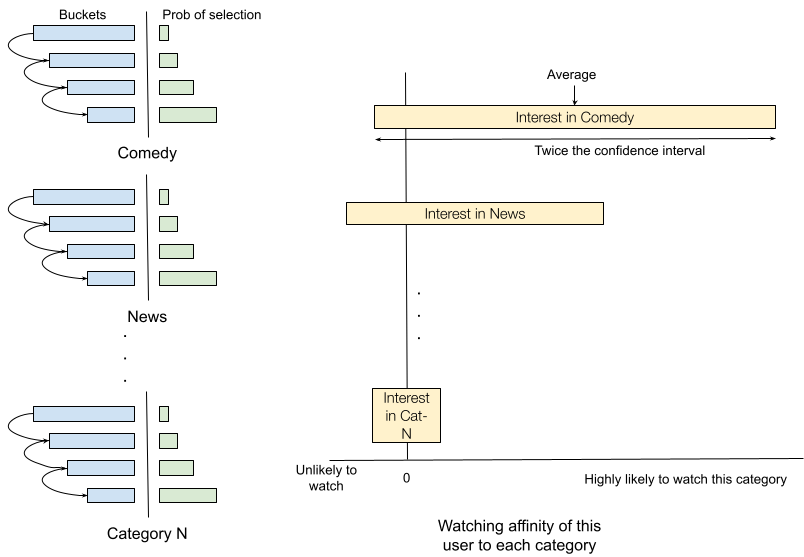

To simulate online learning at scale, in part 1 we separated videos into buckets based on how uncertain we are in estimating their goodness (watch-rate). See Fig 1 above. “Further Improvements” mentioned some shortcomings of that design, such as the lack of personalization and the fact that videos of different categories might have different distributions of the watch-rate metric. To address these, we could use multiple bucket-sets. See Fig 2 below.

We have essentially replicated Fig 1 for each category in our taxonomy. The only added step is that, while serving recommendations, we need to select the top K=3 categories for each user. There we could use online learning (UCB / Thompson Sampling) to learn which categories the user is interested in. (Ref: DeepMind video on online learning)
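The category-selection step can be sketched with Thompson Sampling as follows. This is a minimal illustration, not the design from part 1: it assumes each category keeps per-user watch and skip counts, uses a Beta(1, 1) prior, and the function name `pick_top_categories` is hypothetical.

```python
import numpy as np


def pick_top_categories(watches, skips, k=3, rng=None):
    """Thompson Sampling over categories for a single user.

    watches[i] / skips[i] are how often this user watched / skipped
    videos of category i (assumed bookkeeping, not from the article).
    Returns the indices of the k categories with the highest sampled
    watch-rate.
    """
    if rng is None:
        rng = np.random.default_rng()
    watches = np.asarray(watches, dtype=float)
    skips = np.asarray(skips, dtype=float)
    # Beta(1, 1) prior: an unseen category is sampled uniformly in [0, 1],
    # so it still gets explored from time to time.
    sampled_watch_rate = rng.beta(1.0 + watches, 1.0 + skips)
    # Highest sampled watch-rate first.
    return np.argsort(-sampled_watch_rate)[:k]


# Usage: five categories, pick three for this request.
top3 = pick_top_categories(
    watches=[12, 0, 30, 2, 0],
    skips=[3, 9, 10, 1, 0],
    k=3,
)
```

Because the selection is sampled rather than greedy, categories with few observations keep a chance of being shown, which is how the user's interest in them gets learned.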

Can we do better?

What if videos don’t neatly fall into a category?

What if the initial categorization is not accurate?

What if the concepts / categories users are interested in change over time?

These questions are not new; they are the ones that led recsys researchers to consider embeddings (Netflix Prize) and their variational counterparts (to encode uncertainty). In the following section we will recap a model that maximizes user relevance.

User value - Maximizing relevance for the user

The current state of the art in recommender systems is the four-stage design as shown above. It optimizes for user-relevance and is constantly learning from interactions. Two key modeling components are:

a two-tower (dual-encoder) model trained to retrieve a set of about 100 videos for the given user [1]

a scoring model (historically known as “ranking model”) to estimate the probability of the user liking/watching/sharing the video.

During retrieval, items are ordered by Prob-Watch(user, video), which is estimated by the dot-product of the user interest embedding and the video embedding.
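As a rough sketch of this retrieval step, the snippet below scores every video by its dot product with the user embedding and keeps the top k. It is a brute-force illustration under assumed inputs (embeddings already computed by the two towers). A production system would more likely use an approximate nearest-neighbor index, and the name `retrieve_top_k` is hypothetical.

```python
import numpy as np


def retrieve_top_k(user_embedding, video_embeddings, k=100):
    """Return indices of the k videos with the highest dot-product score.

    user_embedding:   shape (dim,)
    video_embeddings: shape (num_videos, dim)
    """
    scores = video_embeddings @ user_embedding  # one score per video
    k = min(k, len(scores))
    # argpartition finds the k best without sorting everything ...
    top = np.argpartition(-scores, k - 1)[:k]
    # ... then only those k are sorted, best first.
    return top[np.argsort(-scores[top])]


# Usage with toy 2-d embeddings.
user = np.array([1.0, 0.0])
videos = np.array([[0.1, 0.0], [0.9, 0.0], [0.5, 0.0], [-1.0, 0.0]])
best_two = retrieve_top_k(user, videos, k=2)
```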

As shown above, during scoring (a.k.a. ranking) Prob-Watch and other metrics can be estimated as the output of neural networks.

Note that we can choose whether or not to use the creator_id as a feature. If we do use it, the ranking model can reward creators who have past successes by giving them more views. If we intentionally leave it out, videos of all creators will have a similar chance at popularity on the platform.

The above image shows an example of the successful application of personalization on short video platforms. The platform was able to show a niche video (just 5 likes) to a user since it strongly matched the user’s interests.

Personalized embedding + Bucket flows

In Fig 7 above, we have improved upon Fig 2 using embedding training (Fig 4). During training, we learn not only the embeddings but also the variance of the embeddings. On the video side, these uncertainty values help us place videos in the different buckets.

While serving, we could generate say 3 query vectors by sampling d[i] ~ U(0, 1) and vec[i] = user-embed + d[i] * stdev-user-embed. We could query the videos that have the highest dot-product with these query vectors.

If the variance of the user-embedding is high, we could also give a higher weight to diversity boosting.
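The query-vector sampling described above can be sketched as follows. It follows the text literally (one scalar d[i] ~ U(0, 1) per query, scaling the per-dimension standard deviation). The function name and the toy numbers are assumptions for illustration only.

```python
import numpy as np


def sample_query_vectors(user_embed, user_stdev, n_queries=3, rng=None):
    """Build n_queries vectors: vec[i] = user_embed + d[i] * user_stdev.

    user_embed, user_stdev: shape (dim,), the learned mean and standard
    deviation of the user embedding.
    Returns an array of shape (n_queries, dim).
    """
    if rng is None:
        rng = np.random.default_rng()
    # One scalar d[i] ~ U(0, 1) per query, broadcast across dimensions.
    d = rng.uniform(0.0, 1.0, size=(n_queries, 1))
    return user_embed + d * user_stdev


# Usage: each query vector is then matched against video embeddings.
user_embed = np.array([0.2, -0.4, 0.7])
user_stdev = np.array([0.05, 0.30, 0.10])
queries = sample_query_vectors(user_embed, user_stdev)

video_embeddings = np.random.default_rng(0).normal(size=(1000, 3))
scores = queries @ video_embeddings.T       # shape (3, 1000)
best_per_query = scores.argmax(axis=1)      # best video for each query
```

Dimensions with a larger standard deviation move more between queries, so the results vary most along the interests the model is least sure about.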

Variational / Multi-modal user embeddings

Two approaches are commonly used to express, in embeddings, the fact that users have multiple interests. We talked about the variational approach in the previous section, where we learn not just the user embedding but also the uncertainty along each dimension of the embedding.

Another approach is to learn multi-modal user embeddings, i.e. a small set of user embeddings that capture the different user interests. For instance, if YouTube sees my account watch Peppa Pig videos, machine learning videos and NBA highlights, it could learn to represent me with three embeddings. (Ref: PinnerSage for more on this).

How to not rabbit-hole into a niche interest

This WSJ video describes the user experience of TikTok, which seems to learn quickly what the user wants to watch. However, it also seems to rabbit-hole, going further and further in that direction. How can we avoid that? Such narrow watching intent is not sustainable, for either the user or the platform.

As mentioned in the “Optimizing for the long term” section here, periodically bringing the user back towards broader interests and discovering the user’s multiple interests will help. While ranking videos, we could also factor in the inventory size around that video (the platform won’t have much inventory around very niche interests).

Now let’s come to the last and most crucial part of the short-video ecosystem, the creators.

Creator value - Maximizing # of active creators

Creators are incredibly valuable to a short-video platform: a steady stream of high-quality videos on a wide enough set of topics is what keeps an audience engaged.

To maximize creator value, recommend videos to users such that it leads to more creation of videos. The platform should sponsor new creators in a unified ranking model.

The levers the platform has to incentivize video creation are:

Boost videos of new creators to users who are their target audience. Seeing their videos appreciated might incentivize more creation.

Show videos to potential creators who might use these to mimic and create more.

P(creator mimic v) can be modeled by a trainable neural network with both user and video inputs, similar to the scoring models in Fig 5 above.

Estimating the utility of a recommended view to a creator

The utility of a view to a creator derives from:

the probability of the user watching/liking the video

if they do like it, the incremental utility of the watch/like to the creator’s interest in creating another video (due to the positive feedback provided by the platform).

Formally:

Creator-Utility-Uplift (user u, video v of creator c) =

P-create(c | v watched by u) * P-watch(u, v) + P-create(c | v skipped by u) * P-skip(u, v) - P-create(c)

The expression above measures how the probability of creation of video increases due to the recommendation.
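A small worked example of the uplift expression above. The function and argument names are hypothetical, the input probabilities are made-up numbers, and the sketch assumes that watch and skip are the only two outcomes, so P-skip = 1 - P-watch.

```python
def creator_utility_uplift(p_watch, p_create_if_watched,
                           p_create_if_skipped, p_create_baseline):
    """Uplift in the creator's probability of creating another video.

    p_watch:             P-watch, probability that user u watches video v
    p_create_if_watched: P-create(c | v watched by u)
    p_create_if_skipped: P-create(c | v skipped by u)
    p_create_baseline:   P-create(c), without this recommendation
    """
    # Assumption: the user either watches or skips, nothing else.
    p_skip = 1.0 - p_watch
    p_create_after_recommendation = (
        p_create_if_watched * p_watch + p_create_if_skipped * p_skip
    )
    return p_create_after_recommendation - p_create_baseline


# Usage: a likely watch that noticeably encourages the creator.
uplift = creator_utility_uplift(
    p_watch=0.5,
    p_create_if_watched=0.25,
    p_create_if_skipped=0.125,
    p_create_baseline=0.125,
)
# 0.25 * 0.5 + 0.125 * 0.5 - 0.125 = 0.0625
```

In a unified ranking model, an uplift term like this could be added to the user-relevance score, with the weight left as a tuning choice.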

Similarity with ads

Note how the creator-value model is similar to online ads modeling. For instance, the utility model of an online ad is:

Utility to ad-bidder(bidder b, user u, ad a) = P-click(u, a) * Incremental-utility(b, u)

This is also similar to “people you may know” modeling since that involves two sided value as well.

Disclaimer: These are my personal opinions only. Any assumptions, opinions stated here are mine and not representative of my current or any prior employer(s).

References and further reading

Using online learning in short-video recommendations (Platform Value + MVP)

ML Design Interview | Design a short-video recommender system (Start with the user problems)

[Video] Four stage recommender system design (Even Oldridge)

Variational auto-encoders (modeling uncertainty of embeddings)

Two-tower models for recommendations (Efficient self-learning retrieval)

StarSpace - Embedding bipartite relationships using a dual encoder model

PinnerSage: Multi-modal user embedding framework for recommendations at Pinterest

[1] Another option would be to have multiple stages of ranking. For instance, the retrieval stage could start with, say, 2k results, and we could put in 4 stages of ranking and pruning that finally result in about 30 videos or so. This approach was used earlier in massive recommender systems like Facebook since it is computationally efficient. However, the resulting set of results is not optimal as a set, since we have been pruning results individually based on quality. Hence companies are moving to a two-stage design: retrieval with, say, 2k results, then ranking (multi-dimensional scoring), followed by diversity boosting. Read more here.